The world is not prepared for the scale of new technology in our future unless we bring diverse voices from a vast range of disciplines to the table.

Let me tell you a story about a time when the power of computing seemed limitless. When the first question everyone asked was: Would these computers be intelligent and think, like us?

It was a time when more and more data was being generated every day; when the conversations were about what to do with all that computing power and data; and about what this would mean for how the world could – and should – be.

It was a time when governments, universities and companies were all competing to take advantage of recent innovations. Everyone worried about the future, but they also wondered about it.

That time was 1946.

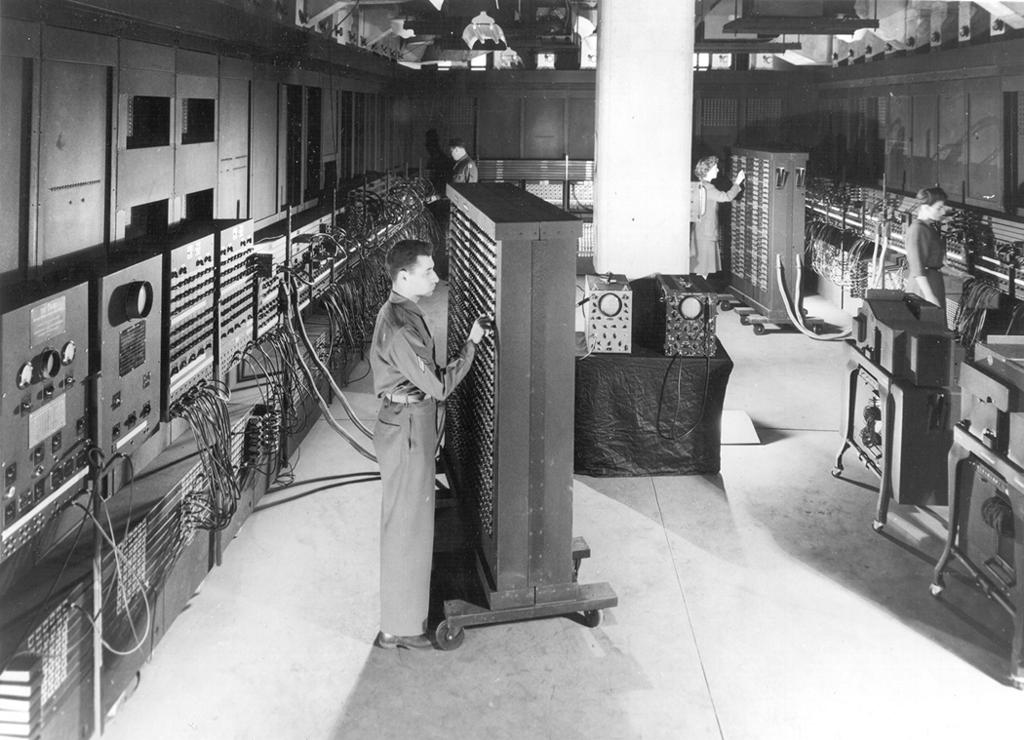

Every month brought new breakthroughs. Things that had been theoretical were suddenly possible. The world’s first general-purpose computer, the ENIAC (Electronic Numerical Integrator and Computer), had just come online.

Alan Turing created the design for the first stored-program computer. Vannevar Bush had recently published “As We May Think” and asked readers to: “Consider a future device … in which an individual stores all his books, records, and communications, and which is mechanized so that it may be consulted with exceeding speed and flexibility. It is an enlarged intimate supplement to his memory.”

A mathematician and philosopher named Norbert Wiener pioneered the idea of the feedback loop, helping shape systems engineering and developing the core theories in systems and control theory. He is well remembered for this work.

Electronic Numerical Integrator and Computer (ENIAC) in the 1940s. It was the first large-scale computer to run at electronic speed without being slowed by any mechanical parts.

For a decade, ENIAC may have run more calculations than all humankind had done up to that point.

In 1946 Wiener coined a new term – “cybernetics” – and created a whole new area of study and a whole new way of looking at the world. He believed the world would mould to become a whole new kind of feedback loop of biological, technical and human systems. We would make new, smart, thinking systems.

Making cybernetics real, however, required more than just a name. It required conversations, champions, and framing questions in such a way as to reveal the edges of cybernetics’ potential.

From 1946 to 1953 a series of conferences took place to try to do just that. Created and curated by anthropologists Margaret Mead and Gregory Bateson, in collaboration with Wiener, these conferences, later known as the Macy cybernetics conferences, took place in New York.

And the participants were strikingly diverse – there were mathematicians and philosophers, physicists and psychologists, anthropologists and historians, hailing from North America (including Mexico), Europe and Asia. They were at different points in their careers and they had different lived experiences.

What Mead, Bateson and Wiener knew was that building something new like this was not a task for one scientific discipline. It could, in fact, only be built in the intersections between fields and existing ideas.

Cybernetics or AI?

In 1953 the Macy Conferences came to an end but the conversations didn’t.

And in 1956, 10 years after Wiener had coined the term cybernetics, a new term would come to take its place: “artificial intelligence”. This, too, was born at a conference of sorts, the Dartmouth Summer Research Project. The proposal for the project stated that:

“The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

The future was being built, again, but this time with fewer voices, and fewer questions. This group, it seemed, already knew the answers – make a machine that can think like a human.

Indeed, many ideas were lost in the transition from cybernetics to artificial intelligence, such as the idea of dynamic systems that included technology, people and ecology; the idea of feedback; and the idea that the world you were making should be subject to critical inquiry.

The thing about ideas, though, is that they move, grow and adapt. Your ideas will always end up in someone else’s hands and, when they do, you hope you gave them enough grace and enough shape to hold up.

Mary Catherine Bateson, daughter of Gregory Bateson and Margaret Mead, wrote of the moment cybernetics gave way to artificial intelligence:

“The tragedy of the cybernetic revolution, which had two phases, the computer science side and the systems theory side, has been the neglect of the systems theory side of it. We chose marketable gadgets in preference to a deeper understanding of the world we live in.”

Our possible futures

So why is this story important to engineering and its possible futures?

Well, because right now we are living in a time when the power of computing seems limitless.

We are still gobsmacked by the volume of data generated every day. Our conversations are still about what to do with all that computing power and all that data.

Right now, governments, universities and companies are all competing to take advantage of recent and forthcoming innovations.

And everyone is worrying about the future, and also wondering about it.

I believe engineers and engineering offer us a way to navigate that future. But to do that successfully, we will need to change and adapt.

An historical analysis of cybernetics and the Macy Conferences reveals that the early conversations were characterised by a systems approach to how society should integrate and develop artificial intelligence, focusing on how humanity might be enhanced.

Economic benefit was a secondary consideration, in stark contrast to how AI applications are positioned and employed in the majority of cases today.

This lesson tells us about innovation and impact on society, and about the need to keep everyone in the loop. Not just the technical loop, but the societal one, too.

The good news is that engineering has always been about precisely this. The whole history of engineering is about a body of knowledge that grew up around the need to keep humans safe as technical systems achieved increasing degrees of scale and scope.

So really, engineers have never been more relevant or more necessary.

Some 200 years ago, the rise of trains and railways required engineers to help ensure steam engines could get to scale safely; today, we need engineers and their allies to help bring artificial intelligence safely to scale.

It takes a village

The world is moving at the speed of light towards a more technologised state. We can call it the digital revolution, the fourth industrial revolution, or the computer age (if you’re of a certain vintage).

I imagine this is a future where artificial intelligence goes to scale. I am not talking about generalised intelligence and the singularity. I’m talking about that time in between, where AI engines are sophisticated enough to get put into lots of machines and autonomous systems, and into the world around us. And start – inevitably imperfectly – making decisions that affect people’s lives.

That is a very complex future.

If we translate the skills we have today onto that future, we come up short. We have lawyers who are trying to regulate configurations of technologies they don’t understand. We have computer programmers who are coding algorithms for machines without being sure how those machines will act in response to algorithmic output. And we have engineering teams who are trying to put autonomous vehicles on roads without understanding the social and political context of transport.

I believe that the world needs a new way of thinking to tackle these coming challenges.

I think the answer lies with a re-imagining of cybernetics and re-capturing the systems theory core of that nascent conversation. This new body of knowledge would be more closely tied to Wiener’s notion of cybernetics, which, for all its madness, can be re-interpreted for the 21st century in ways that we desperately need.

The practitioners in the new field will have to be able to ask the right questions. They will integrate scientific insights across disciplines examining intelligence, including computer science, systems theory, psychology, anthropology, the law and so on.

And they must use them in a practical decision-making framework that will assist them to design, build, scale, manage, regulate and decommission cyber-physical systems (autonomous systems super-charged by AI and the Internet of Things).

When we build, every step of the way is a decision with real-world impact. We must equip our engineers with the skills they need to bring in diverse voices and make good decisions at every point.

We need thinkers who see the whole.

Genuine, society-wide innovation is different to making gadgets. It is hard work. And it is better with more voices than fewer. It is part luck, part hubris. A willingness to fail a little, to be reborn as something else. It is also persistence and purpose.

I hope we are up for the challenge!

Distinguished Professor Genevieve Bell FTSE

Director of the 3A Institute and Florence Violet McKenzie Chair at ANU

Distinguished Professor Genevieve Bell is the foremost global leader in applying social sciences and cultural anthropology to technology development and digital transformation. With a background in cultural anthropology, she has an unparalleled reputation as a leader at the intersection of technology and social sciences, and the impact of digital transformation. Her combined industry and academic impact is creating new fields at the intersection of social and technology sciences. She has been a globally recognised champion of diversity and gender equity, and a practical mentor and supervisor to colleagues and students.